
Improving Data Quality: Practical guidance for better results

Many companies are concerned about the quality of their data. Data is increasingly the foundation for key business decisions, yet managing, transforming and delivering it becomes harder as data volumes continue to rise. Quality issues are a major cause of this difficulty: inconsistent or inaccurate data, duplicates and unclear data governance lead to decision risks and ineffective processes, and they cost a great deal of time because the data has to be cleaned up, often manually, again and again.

How is data quality measured?

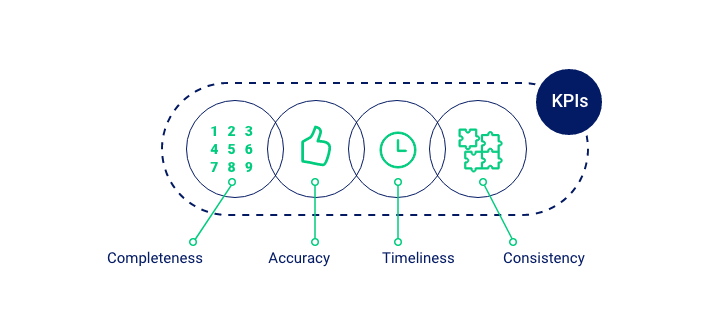

Perfect data quality looks different for each process, data recipient and objective. Management reporting, for example, requires less granular data than publishing to global data pools, which demand strict quality standards. It is therefore important to first define the right data quality for each purpose. The most important KPIs are:

Completeness: A common quality problem is the incompleteness of data sets – i.e., missing attribute values. When implementing technology solutions, the specification of a data model is an important task, as it determines from the very beginning what a perfect data set must look like in order to optimally serve each data recipient and each process. Data maintenance must then ensure that every data set is fully populated and covers the information requirements defined in the model.

Accuracy: An essential quality criterion is the accuracy of the information. Only information that is 100 percent reliable and objective should find its way into the company’s central data storage. Any inaccuracy or error distorts the reality reflected by the data. Therefore, compliance with this quality criterion is an important prerequisite for the transformation of companies into data-driven organizations.

Timeliness: Closely linked to the accuracy of data is its timeliness. Continuous data and content services are necessary to ensure that no outdated data is used. If there are changes to a product or if certain assortments are no longer offered, then this must be updated immediately in the respective master system so that these adjustments are taken into account accordingly in the downstream systems and processes.

Consistency: Not only does data have to be correct and complete – its design specifications must also be strictly adhered to in order to ensure consistent data management and make the data usable in the first place. When defining the data model, the attributes and their permitted value ranges are also specified. Data sets must be maintained within these rules so that the processes and insights built on them are accurate and error-free.
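To make these KPIs more tangible, here is a minimal sketch of how three of them could be expressed as simple ratios. The data model, the attribute names and the one-year freshness threshold are assumptions made for illustration only and do not come from any specific product. Accuracy is left out on purpose, because it can only be judged against a trusted reference source.

```python
from datetime import date

# Hypothetical data model: attribute -> allowed values (None = any non-empty value).
DATA_MODEL = {
    "sku": None,
    "name": None,
    "color": {"red", "green", "blue"},
    "unit": {"piece", "kg"},
}


def completeness(records, model=DATA_MODEL):
    """Share of modelled attribute values that are actually filled."""
    required = len(records) * len(model)
    filled = sum(
        1
        for record in records
        for attribute in model
        if record.get(attribute) not in (None, "")
    )
    return filled / required if required else 1.0


def consistency(records, model=DATA_MODEL):
    """Share of filled, restricted values that lie inside the allowed set."""
    checked = 0
    valid = 0
    for record in records:
        for attribute, allowed in model.items():
            value = record.get(attribute)
            if value in (None, "") or allowed is None:
                continue  # empty values count against completeness, not consistency
            checked += 1
            if value in allowed:
                valid += 1
    return valid / checked if checked else 1.0


def timeliness(records, today, max_age_days=365):
    """Share of records updated within the last max_age_days (assumed threshold)."""
    if not records:
        return 1.0
    fresh = sum(1 for r in records if (today - r["updated"]).days <= max_age_days)
    return fresh / len(records)


records = [
    {"sku": "A1", "name": "Mug", "color": "red", "unit": "piece",
     "updated": date(2024, 5, 1)},
    {"sku": "A2", "name": "", "color": "purple", "unit": "kg",
     "updated": date(2022, 1, 15)},
]

print(completeness(records))                        # 0.875
print(consistency(records))                         # 0.75
print(timeliness(records, today=date(2024, 6, 1)))  # 0.5
```

Which ratio counts as "good enough" depends on the purpose: a target that is acceptable for management reporting may be too low for publishing to a data pool.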

The role of system architecture

As already indicated, the respective leading systems are responsible for defining the right data quality and for keeping data up-to-date, consistent and accurate. This implies a very clear division of tasks and responsibilities in the system landscape – which may seem obvious, but is still difficult to implement in many cases. Particularly in complex organizational structures, it is frequently the case that each department or country organization has its own systems in use. Some data is even stored locally in Excel spreadsheets, and central access to important company data is not possible at all.

This is why the first step towards high-quality data is the definition and implementation of a consolidated system landscape, which forms the basis for mapping all business processes. It is important to consider the entire digital value chain and thus the core disciplines of PIM and DAM as the basic technologies for all product content processes, from the creation and preparation to the distribution of high-quality product messages.

The role of data processes

While a consolidated and clearly defined system landscape is an important prerequisite for data quality, appropriate processes, testing and validation rules are needed in operations and in everyday work with data to enforce data quality specifications.

Automation plays a key role in helping employees control the complexity of creating and managing product content while delivering the required quality at all times – across all channels. Appropriate rules could, for example, raise an alert when product images do not meet the formats required by the desired output channel. Validation processes can also notify the responsible employees when certain data records are incomplete or when duplicates have been found that need to be resolved manually.
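The kinds of rules described above could look roughly like the following sketch. The channel names, accepted image formats, required attributes and the duplicate heuristic (identical names after trimming and lowercasing) are all hypothetical; a real system would define them in its own data model.

```python
from collections import defaultdict

# Hypothetical channel requirements: channel -> accepted image formats.
CHANNEL_IMAGE_FORMATS = {
    "webshop": {"jpg", "png", "webp"},
    "print_catalog": {"tiff"},
}
REQUIRED_ATTRIBUTES = ("sku", "name", "image")


def image_format(filename):
    """Return the lowercased file extension, or '' if there is none."""
    return filename.rsplit(".", 1)[-1].lower() if "." in filename else ""


def validate(records, channel):
    """Return (sku, message) findings for the responsible employees."""
    findings = []
    accepted = CHANNEL_IMAGE_FORMATS[channel]
    skus_by_name = defaultdict(list)

    for record in records:
        sku = record.get("sku") or "<missing sku>"

        # Rule 1: the record must be complete.
        missing = [a for a in REQUIRED_ATTRIBUTES if not record.get(a)]
        if missing:
            findings.append((sku, "incomplete: missing " + ", ".join(missing)))

        # Rule 2: the image must match the output channel's formats.
        image = record.get("image")
        if image and image_format(image) not in accepted:
            fmt = image_format(image)
            findings.append((sku, f"image format '{fmt}' not accepted for {channel}"))

        # Collect normalized names for the duplicate check below.
        name = (record.get("name") or "").strip().lower()
        if name:
            skus_by_name[name].append(sku)

    # Rule 3: flag possible duplicates for manual resolution.
    for skus in skus_by_name.values():
        if len(skus) > 1:
            findings.append((skus[0], "possible duplicate of " + ", ".join(skus[1:])))

    return findings


records = [
    {"sku": "A1", "name": "Coffee Mug", "image": "mug.jpg"},
    {"sku": "A2", "name": "coffee mug ", "image": "mug2.tiff"},
    {"sku": "A3", "name": "Teapot", "image": ""},
]

for sku, message in validate(records, "webshop"):
    print(sku, "->", message)
```

The function only reports findings and changes nothing itself, which matches the idea that duplicates and gaps are resolved by the responsible employees.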

When creating data records, there is another set of checking mechanisms that help contributors to maintain the quality of the data created from the very beginning. The systems can define a certain value range for filling the attributes and prevent inadmissible number formats or descriptions in order to ensure data consistency and avoid costly cleanup at a later stage.
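A check at entry time might be sketched as follows. The attribute names, the numeric range, the 13-digit pattern and the allowed color values are assumptions chosen for illustration, not rules taken from a particular system.

```python
import re

# Hypothetical attribute rules, as they might be derived from a data model.
RULES = {
    "weight_kg": {"type": float, "min": 0.0, "max": 1000.0},
    "ean": {"pattern": re.compile(r"\d{13}")},
    "color": {"allowed": {"red", "green", "blue"}},
}


def check_value(attribute, value):
    """Return None if the value is admissible, otherwise an error message."""
    rule = RULES.get(attribute)
    if rule is None:
        return f"unknown attribute '{attribute}'"

    if "type" in rule:
        try:
            number = rule["type"](value)
        except (TypeError, ValueError):
            return f"{attribute}: '{value}' is not a valid number"
        if not rule["min"] <= number <= rule["max"]:
            return f"{attribute}: {number} is outside {rule['min']}..{rule['max']}"

    if "pattern" in rule and not rule["pattern"].fullmatch(str(value)):
        return f"{attribute}: '{value}' does not match the required format"

    if "allowed" in rule and value not in rule["allowed"]:
        return f"{attribute}: '{value}' is not an allowed value"

    return None


print(check_value("weight_kg", "12.5"))  # None
print(check_value("weight_kg", "12,5"))  # weight_kg: '12,5' is not a valid number
print(check_value("color", "purple"))    # color: 'purple' is not an allowed value
```

Rejecting a value such as "12,5" at the moment it is entered is usually cheaper than finding and correcting it later in downstream systems.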

For such processes to be established, it is important to consider at the outset what the cross-system data flows will look like, who will be involved, and who will be responsible for the quality of the data. In addition, it is advisable to consider not only the current output channels and organizational structures, but also possible future changes in the process landscape.

Data Governance

These are all complex tasks. The discipline that ensures the defined rules, responsibilities and audit processes are enforced is called data governance. It is the most important tool for companies that want to improve their data quality and thereby secure their operational efficiency in the long term. Ideally, the rules defined in data governance are set out in a comprehensive document and made available centrally to all stakeholders.

mediacockpit supports companies in providing the appropriate data quality at every touchpoint and for every data recipient. Our solution focuses on managing and providing optimal product and media data for the respective requirements, which are perfectly mapped by a generic data model. Automations and intelligent workflows ensure that quality rules are adhered to without compromise.

Request your comprehensive data governance guide! Learn how to establish effective data governance practices in your organization. Our guide covers best practices, rules, and responsibilities for ensuring high-quality data across your systems.

Accomplish more together

We believe in the value of collaboration and exchange. This applies both to our customer projects, from which we gain many valuable insights for our product development, and to our growing partner network, whose extensive range of offerings helps us support our customers in their digitization.